: An Investigative Analysis

In the rapidly advancing realm of artificial intelligence, self-learning neural networks are emerging as a transformative technology that promises to redefine machine autonomy, efficiency, and adaptability. From autonomous vehicles to personalized medicine, these systems have a remarkable capacity for continuous learning with little explicit supervision. This article examines the mechanisms, challenges, and innovations shaping the future of self-learning neural networks. It is written for developers, engineers, researchers, founders, and investors who want a detailed yet thorough understanding of this pivotal AI frontier.

Understanding Self-Learning Neural Networks: Beyond Traditional AI Paradigms

Defining Self-Learning in Neural Architectures

Self-learning neural networks are a class of AI systems that autonomously improve their performance by discovering patterns and adapting their parameters in dynamic, often ambiguous environments, without relying solely on labeled datasets. Unlike traditional supervised neural networks, which are constrained by pre-annotated training data, these models combine unsupervised, semi-supervised, or reinforcement learning principles with meta-learning to refine their internal representations.

Key Differentiators from Conventional Models

The hallmark attributes of self-learning networks include continual learning, self-optimization, and the ability to extrapolate learned knowledge to novel contexts. Architecturally, they integrate feedback loops, intrinsic motivation mechanisms, and adaptive rewards that allow iterative self-improvement—capabilities critical in complex real-world applications such as autonomous robotics or financial forecasting systems.

Innovation in dynamic feedback mechanisms is transforming self-learning neural networks into resilient systems capable of evolving with changing data distributions and task complexities.

Architectural Foundations Driving Self-Learning Neural Networks

Core Components and Data Flows

The architecture of self-learning neural networks typically comprises multiple interconnected modules: perception layers, memory units, decision-making circuits, and meta-learning layers. Perception extracts features from unstructured input, memory modules (e.g., external memory or neural Turing machines) enable retention and retrieval of previously learned representations, and meta-learners orchestrate parameter tuning based on internal or external feedback.
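
Content-based memory reads, as popularized by neural Turing machines, can be sketched in a few lines. The example below is a minimal illustration that assumes a small hand-filled memory matrix and a read key supplied by the controller. The function names and the sharpness parameter `beta` are illustrative choices; full NTMs also add location-based addressing and write heads, which are not shown.

```python
import math

def cosine(u, v):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def content_read(memory, key, beta=5.0):
    """Read by content: weights = softmax(beta * cos(key, row)); read = weighted sum of rows."""
    weights = softmax([beta * cosine(key, row) for row in memory])
    width = len(memory[0])
    read = [sum(w * row[c] for w, row in zip(weights, memory)) for c in range(width)]
    return weights, read

# Illustrative memory contents and key (assumptions, not learned values).
memory = [
    [1.0, 0.0, 0.0],  # slot 0
    [0.0, 1.0, 0.0],  # slot 1
    [0.0, 0.0, 1.0],  # slot 2
]
weights, read = content_read(memory, key=[0.9, 0.1, 0.0], beta=10.0)
print([round(w, 3) for w in weights])
print([round(r, 3) for r in read])
```

A larger `beta` makes the read sharper (closer to retrieving a single slot), while a smaller one blends several slots together.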

Role of Neuroplasticity and Dynamic Connectivity

Inspired by biological brains, artificial neuroplasticity mechanisms enable adaptive synaptic modification and dynamic connectivity adjustments, allowing networks to reconfigure pathways in response to new information. These adaptive circuits help reduce catastrophic forgetting and support more effective lifelong learning.
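
Oja's rule is a classic, well-studied form of Hebbian synaptic plasticity, and it gives a concrete feel for "adaptive synaptic modification." The sketch below is illustrative only. The correlated synthetic inputs and the learning rate are assumptions, and the rule is not presented as the mechanism any particular self-learning system uses. Under this rule the weight vector drifts toward the dominant direction of variance in its inputs while its norm settles near 1.

```python
import random

def oja_update(w, x, lr=0.01):
    """One step of Oja's rule: dw = lr * y * (x - y * w), with y = w . x."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]

rng = random.Random(0)
w = [rng.uniform(-0.5, 0.5) for _ in range(2)]
for _ in range(5000):
    # Synthetic, correlated inputs (assumption): the second component mostly
    # copies the first, so the dominant direction is roughly (1, 1) / sqrt(2).
    s = rng.gauss(0.0, 1.0)
    x = [s, s + rng.gauss(0.0, 0.1)]
    w = oja_update(w, x, lr=0.01)

print([round(wi, 2) for wi in w])
```

The decay term `- y * w` is what keeps plain Hebbian growth from blowing up the weights.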

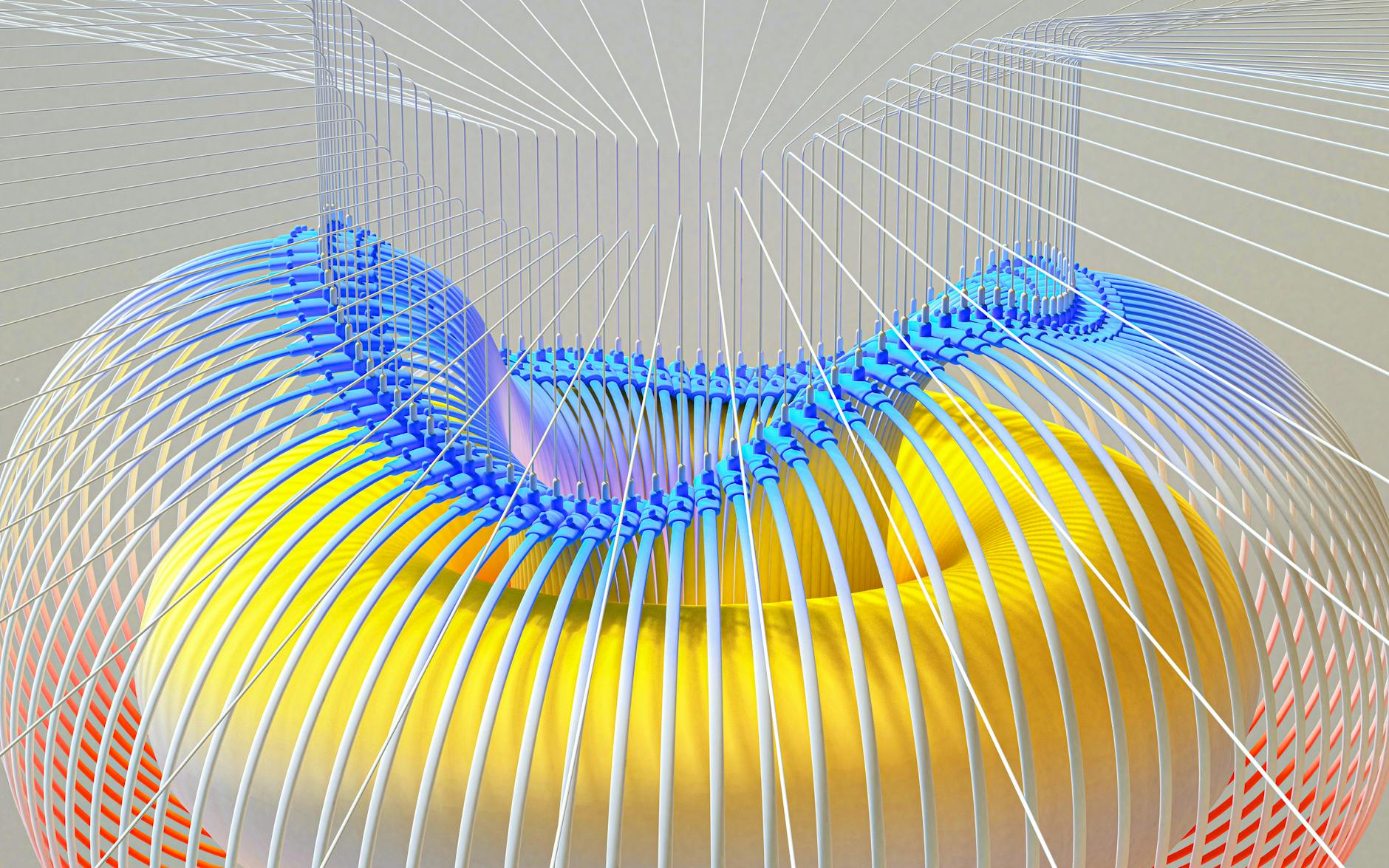

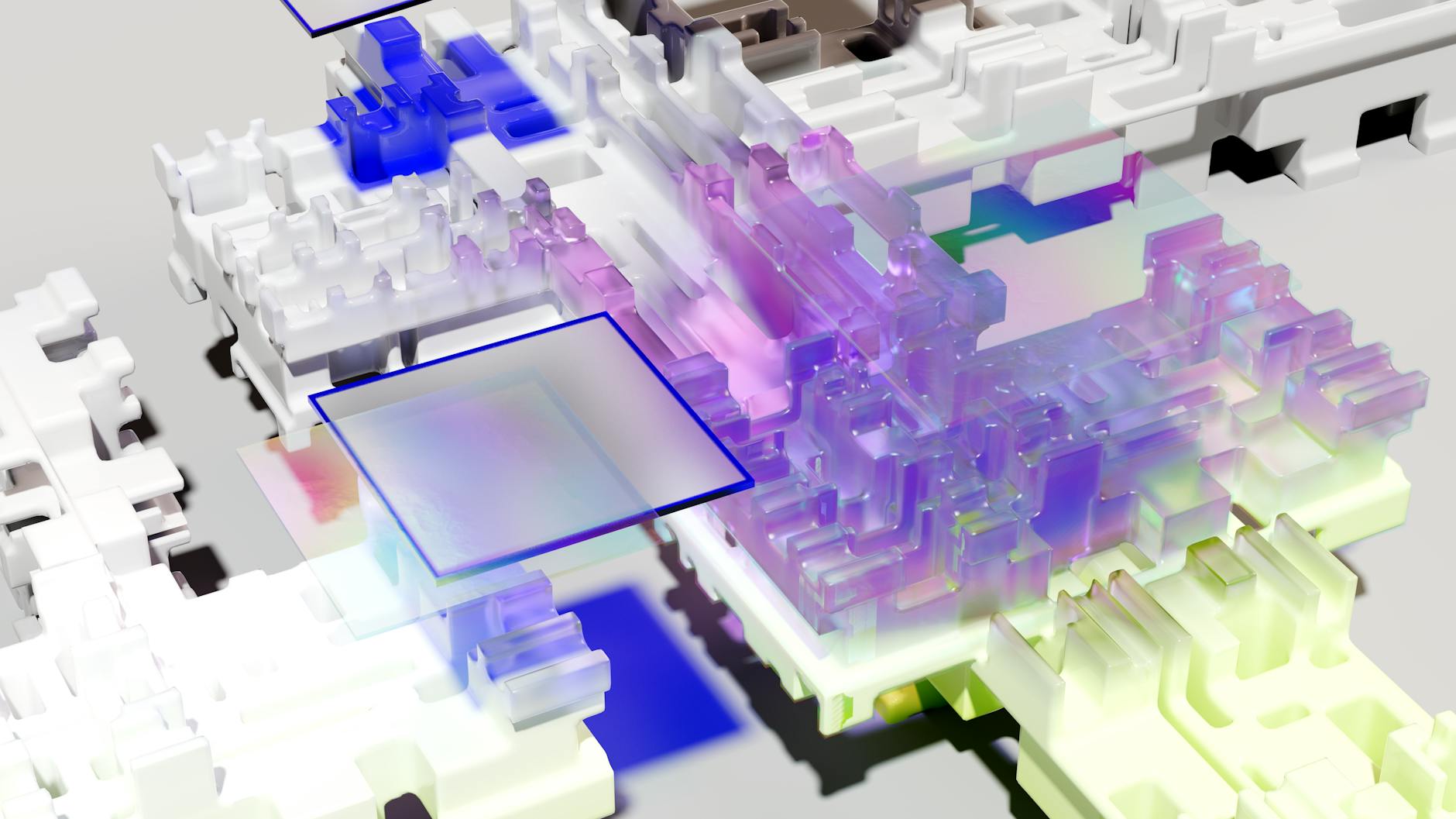

Conceptual Architecture Visualization

Advanced Algorithms Propelling Self-Learning Neural Networks Forward

Unsupervised and Self-Supervised Learning Techniques

Self-supervised learning, a paradigm in which the data itself generates the supervisory signal, plays a pivotal role in self-learning networks. Contrastive learning, predictive coding, and generative pretext tasks underpin contemporary advances, enabling networks to build robust feature hierarchies without external labels. This improves generalization and can substantially reduce data-labeling costs.
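
Contrastive objectives are one concrete way for data to generate its own supervisory signal. Two views of the same example should embed close together, and views of different examples should embed far apart. The sketch below computes an InfoNCE-style loss on hand-written 2-D embeddings that stand in for encoder outputs. The temperature of 0.1 and the embeddings are assumptions; practical implementations use batched tensor operations and often a symmetric version of the loss.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-8)

def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE loss: anchor i must pick out positives[i] among all positives.

    Row i of the similarity matrix acts as logits for a classification problem
    whose correct class is i; the other positives serve as negatives.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [cosine(anchors[i], positives[j]) / temperature for j in range(n)]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with target i
    return total / n

# Illustrative hand-written embeddings for two "views" of three examples (assumption).
view_a = [[1.0, 0.1], [0.0, 1.0], [-1.0, 0.2]]
aligned = [[0.9, 0.2], [0.1, 0.9], [-0.9, 0.1]]  # views of the same examples agree
shuffled = [aligned[1], aligned[2], aligned[0]]   # views paired with the wrong examples

print(round(info_nce(view_a, aligned), 4))
print(round(info_nce(view_a, shuffled), 4))
```

Training an encoder to minimize this loss pushes matching views together without any human labels.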

Reinforcement Learning and Intrinsic Motivation

By mimicking reward-based learning observed in humans and animals, reinforcement learning algorithms provide self-learning systems with evaluative signals that drive improvements in decision-making. Intrinsic motivation frameworks, such as curiosity-driven exploration and information-gain maximization, give neural models autonomous objectives, improving adaptability in opaque or sparse-reward environments.
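
A count-based novelty bonus is one of the simplest forms of intrinsic motivation. It is a close relative of curiosity-driven exploration, which typically uses a learned model's prediction error instead of visit counts. The sketch assumes hashable, discrete states and an illustrative bonus scale `beta`. Tabular counts do not scale to large state spaces, where pseudo-counts or prediction-error curiosity are used instead.

```python
import math
from collections import defaultdict

class CountBonus:
    """Count-based novelty bonus: r_int(s) = beta / sqrt(N(s)).

    Rarely visited states earn a larger bonus, which pushes the agent to
    explore even when the extrinsic reward is sparse or zero.
    """

    def __init__(self, beta=0.1):
        self.beta = beta  # bonus scale (illustrative assumption)
        self.counts = defaultdict(int)

    def __call__(self, state):
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])

def shaped_reward(extrinsic, state, bonus):
    """Total learning signal = extrinsic reward + intrinsic novelty bonus."""
    return extrinsic + bonus(state)

# States are hypothetical grid coordinates; the extrinsic reward is zero (sparse setting).
bonus = CountBonus(beta=0.5)
first = shaped_reward(0.0, (0, 0), bonus)                            # 1st visit
fourth = [shaped_reward(0.0, (0, 0), bonus) for _ in range(3)][-1]  # 4th visit
novel = shaped_reward(0.0, (3, 4), bonus)                           # unseen state
print(first, fourth, novel)
```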

Meta-Learning: Learning to Learn

Meta-learning endows neural networks with the ability to evolve their own learning strategies, optimizing hyperparameters dynamically and accelerating adaptation to unforeseen tasks. This learning-to-learn approach is crucial in developing systems that persistently refine themselves and require minimal human intervention over time.
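
Reptile, a first-order meta-learning algorithm, shows the learning-to-learn loop in miniature. It adapts to a sampled task with a few gradient steps, then moves a shared initialization toward the adapted weights. Everything below is an illustrative assumption: the scalar model, the task family y = a·x with a drawn from U(2, 4), the learning rates, and the step counts. The point is that the learned initialization lands near the centre of the task distribution, so a new task needs fewer steps to fit.

```python
import random

XS = [-1.0, -0.5, 0.5, 1.0]  # fixed input grid shared by all tasks (assumption)

def sample_task(rng):
    """A hypothetical task: 1-D regression y = a * x with a drawn from U(2, 4)."""
    a = rng.uniform(2.0, 4.0)
    return [(x, a * x) for x in XS]

def sgd_steps(w, data, lr=0.1, steps=5):
    """Inner loop: gradient descent on mean squared error for the model y_hat = w * x."""
    for _ in range(steps):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def reptile(meta_iters=1000, meta_lr=0.1, seed=0):
    """Reptile: adapt to a sampled task, then nudge the shared init toward the adapted weight."""
    rng = random.Random(seed)
    theta = 0.0
    for _ in range(meta_iters):
        adapted = sgd_steps(theta, sample_task(rng))
        theta += meta_lr * (adapted - theta)
    return theta

theta = reptile()
print(round(theta, 2))  # should sit near the centre of the task distribution
```

As a quick check of the design, five inner steps on an unseen task with a = 3.5 end much closer to the target when they start from the meta-learned `theta` than when they start from zero.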

Challenges in Scaling Self-Learning Neural Networks

Addressing Catastrophic Forgetting

One of the predominant hurdles is catastrophic forgetting, in which learning new information overwrites previously acquired knowledge. Rehearsal (replay) techniques, regularization strategies, and memory buffers are critical to mitigating this risk, but all of them remain active research areas.
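
A rehearsal buffer needs a policy for what to keep once its memory is full. Reservoir sampling is one common choice: it keeps a uniform random sample of everything seen so far, so earlier tasks stay represented as new ones arrive. The sketch below assumes tasks arrive sequentially and that each stored item is a `(task_id, example)` pair. The capacity and item format are illustrative, and regularization-based alternatives are not shown.

```python
import random

class ReservoirBuffer:
    """Fixed-size rehearsal memory filled by reservoir sampling.

    After n items have streamed past, each one is retained with probability
    capacity / n, so old tasks stay represented without storing everything.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            j = self.rng.randrange(self.seen)  # uniform over [0, seen)
            if j < self.capacity:
                self.items[j] = item  # replace a random slot

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))

# Illustrative stream: three tasks of 1000 examples each, arriving one after another.
buffer = ReservoirBuffer(capacity=100)
for task_id in range(3):
    for i in range(1000):
        buffer.add((task_id, i))

# A replay batch mixes earlier tasks into training on the current one.
batch = buffer.sample(32)
print(sorted({task for task, _ in buffer.items}))
```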

Computational Costs and Efficiency

Scaling self-learning networks to industrial workloads demands efficient training algorithms and energy-conscious hardware. Sparse connectivity, quantization, and neuromorphic computing approaches serve as promising avenues to balance model complexity and operational efficiency.
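
Quantization trades numerical precision for memory and compute. A minimal sketch of symmetric per-tensor int8 post-training quantization follows, assuming plain Python lists of float weights. Production toolchains typically add per-channel scales, calibration data, and zero points. Storing int8 values instead of float32 cuts weight storage by a factor of four, and the round-trip error per weight is at most half the scale.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # guard against an all-zero tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [qi * scale for qi in q]

# Illustrative weights (assumption).
weights = [0.42, -1.27, 0.003, 0.9, -0.05]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 5), round(max_err, 5))
```

Note that the tiny weight 0.003 rounds to zero, which is one reason per-channel scales help when weight magnitudes vary widely.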

Ensuring Robustness and Safety

Self-learning mechanisms inherently risk unexpected model behaviors due to feedback loops and autonomous decision-making. Rigorous evaluation frameworks, safety constraints, and interpretability layers are pivotal to ensuring these systems remain reliable and ethical in deployment.

Emerging Hardware Technologies Facilitating Self-Learning at Scale

Neuromorphic Chips: Bridging Biology and Silicon

Neuromorphic computing architectures, designed to emulate neurobiological structures and signal processing, offer highly efficient platforms optimized for self-learning neural networks. Their event-driven processing and fault tolerance facilitate continuous adaptive learning without prohibitive energy costs.
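
The leaky integrate-and-fire (LIF) neuron is the standard simplified model behind many spiking and neuromorphic designs. The software simulation below is only a sketch with illustrative parameters (time constant, threshold, and reset value), not a model of any specific chip. It shows the event-driven character of such systems: the output is a sparse list of spike times, and a sub-threshold input produces no spikes at all, so downstream units have nothing to process.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron, Euler-integrated.

    dv/dt = (-(v - v_rest) + I(t)) / tau; spike and reset when v >= v_thresh.
    Parameter defaults are illustrative assumptions.
    Returns the list of time steps at which the neuron spiked.
    """
    v = v_rest
    spikes = []
    for t, current in enumerate(input_current):
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            spikes.append(t)
            v = v_reset
    return spikes

quiet = simulate_lif([0.5] * 200)   # settles at 0.5, below threshold: no events
driven = simulate_lif([1.5] * 200)  # strong constant input: regular spiking
print(len(quiet), len(driven))
```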

Edge AI and On-Device Learning

With the proliferation of IoT and mobile devices, edge AI hardware tailored for self-learning allows real-time, privacy-preserving training at the data source. This decentralization reduces latency and bandwidth requirements while enhancing autonomy.

FPGA and ASIC Acceleration

Field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) are increasingly customized to support adaptive neural architectures, offering hardware-level adaptability to iterate learning protocols and optimize throughput.

The Role of Explainability and Interpretability in Self-Learning Systems

Why Transparency Matters in Autonomous Learning

As neural networks increasingly operate without human supervision, designers must ensure that self-learning mechanisms remain interpretable. Explainability not only fosters trust, which is essential for adoption in sensitive industries like healthcare and finance, but also aids in debugging and regulatory compliance.

Techniques for Interpreting Self-Learned Representations

Layer-wise relevance propagation, attention visualization, and surrogate model analysis illuminate internal decision pathways, even in networks evolving autonomously. These tools help stakeholders validate network reasoning and detect emergent biases or failure modes early.
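
Layer-wise relevance propagation redistributes an output score backward through the network so that each input receives a share of it. The sketch below applies the LRP-ε rule to a tiny two-layer ReLU network whose weights are hand-picked for illustration (an assumption; real tooling operates on trained models and offers several propagation rules). With zero biases, the input relevances sum approximately to the output score, which is the conservation property LRP is designed around.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def dense(a, W, b):
    """Affine layer: z_j = sum_i W[j][i] * a_i + b_j."""
    return [sum(w * x for w, x in zip(row, a)) + bj for row, bj in zip(W, b)]

def lrp_epsilon(a, W, b, relevance_out, eps=1e-6):
    """LRP-epsilon rule for one dense layer.

    R_i = sum_j (a_i * W[j][i]) / (z_j + eps * sign(z_j)) * R_j
    """
    relevance_in = [0.0] * len(a)
    for row, bj, r_j in zip(W, b, relevance_out):
        z = sum(w * x for w, x in zip(row, a)) + bj
        denom = z + (eps if z >= 0 else -eps)  # stabilizer avoids division by ~0
        for i, (x, w) in enumerate(zip(a, row)):
            relevance_in[i] += x * w / denom * r_j
    return relevance_in

# Tiny 3-2-1 ReLU network with hand-picked weights (illustrative assumption).
W1, b1 = [[0.5, 0.2, 0.1], [0.3, 0.4, -0.6]], [0.0, 0.0]
W2, b2 = [[1.0, 0.5]], [0.0]
x = [1.0, 2.0, -1.0]

h = relu(dense(x, W1, b1))
y = dense(h, W2, b2)

# Start from the output score and propagate relevance back to the inputs.
# ReLU passes relevance through unchanged, so only the dense layers need a rule.
r_hidden = lrp_epsilon(h, W2, b2, y)
r_input = lrp_epsilon(x, W1, b1, r_hidden)
print([round(r, 3) for r in r_input], round(sum(r_input), 3), round(y[0], 3))
```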

Innovation in interpretability frameworks is transforming how developers and users validate and control self-learning neural network behaviors, bridging the gap between autonomy and oversight.

Applications Propelling Industrial Adoption of Self-Learning Neural Networks

Autonomous Systems and Robotics

Robots equipped with self-learning neural networks show greater adaptability in unstructured environments, from manufacturing lines that self-optimize to drones that autonomously navigate changing terrain. This flexibility reduces downtime and makes operations faster to scale.

Personalized Medicine and Genomics

In healthcare, self-learning models digest complex biological data to yield personalized treatment plans and early disease predictions, dynamically refining their accuracy with longitudinal patient monitoring.

Financial Forecasting and Algorithmic Trading

Finance harnesses self-learning networks for real-time market trend analysis and risk management, enabling neural models to evolve strategies responsive to novel market stimuli with minimal human oversight.

Evaluating the Performance Metrics of Self-Learning Neural Networks

Continuous Learning KPIs

Beyond conventional accuracy and precision, assessment metrics include adaptation speed, stability over time, sample efficiency, and robustness to distributional shifts. Monitoring these KPIs is critical for benchmarking progress in self-learning systems.

Robustness and Generalization Evaluations

Stress tests involving adversarial inputs and novel environments validate the neural network’s ability to generalize learned knowledge. Metrics such as out-of-distribution accuracy and catastrophic forgetting rates guide iterative development.
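
Several of these KPIs can be computed from a single accuracy matrix recorded during a sequential-task run. The sketch below uses definitions commonly found in the continual-learning literature: average final accuracy, backward transfer, and average forgetting. The 3-task matrix is made-up data for illustration, not a measured result.

```python
def continual_metrics(acc):
    """Summarize an accuracy matrix from a sequential-task run.

    acc[i][j] = accuracy on task j measured after training on tasks 0..i.
    Returns (average final accuracy, backward transfer, average forgetting).
    """
    T = len(acc)
    if T < 2:
        raise ValueError("need at least two tasks")
    final = acc[T - 1]
    avg_acc = sum(final) / T
    # Backward transfer: effect of later training on earlier tasks (negative = forgetting).
    bwt = sum(final[j] - acc[j][j] for j in range(T - 1)) / (T - 1)
    # Forgetting: drop from the best accuracy reached on task j before the end to its final value.
    forgetting = sum(
        max(acc[i][j] for i in range(j, T - 1)) - final[j] for j in range(T - 1)
    ) / (T - 1)
    return avg_acc, bwt, forgetting

# Hypothetical 3-task run (made-up numbers for illustration only).
acc = [
    [0.95, 0.10, 0.12],
    [0.80, 0.93, 0.11],
    [0.70, 0.85, 0.94],
]
avg_acc, bwt, forgetting = continual_metrics(acc)
print(round(avg_acc, 3), round(bwt, 3), round(forgetting, 3))
```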

Ethical, Privacy, and Security Considerations in Autonomous Neural Learning

Safeguarding Data Privacy in Continuous Learning

Self-learning models must respect data sovereignty and privacy by design. Techniques such as federated learning and differential privacy mitigate risks, enabling collaborative yet confidential learning.
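
Federated learning keeps raw data on each device and shares only model parameters. The core server-side step of federated averaging (FedAvg) is a size-weighted mean of client models, sketched below with hypothetical client weights and dataset sizes. Differential privacy would additionally clip each client update and add calibrated noise before aggregation. That step, and the privacy accounting it requires, is not shown.

```python
def fed_avg(client_weights, client_sizes):
    """Federated averaging: combine client models weighted by local dataset size.

    Only parameter vectors reach the server; raw client data never leaves the device.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]

# Three hypothetical clients that trained locally on 100, 300, and 600 examples.
clients = [[0.2, 1.0], [0.4, 0.8], [0.1, 1.2]]
sizes = [100, 300, 600]
global_model = fed_avg(clients, sizes)
print(global_model)
```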

Mitigating Bias and Ensuring Fairness

Autonomous adaptation may inadvertently propagate or amplify biases present in uncurated data. Proactive fairness auditing, unbiased training protocols, and inclusive dataset design remain integral safeguards.
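
A fairness audit can start with simple group-level statistics. The sketch below computes the demographic parity gap (the largest difference in positive-decision rate between groups) on a hypothetical batch of decisions. Demographic parity is only one of several fairness criteria, equalized odds being another, and which one fits depends on the application.

```python
def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups.

    predictions: list of 0/1 model decisions; groups: group label for each row.
    A gap near 0 means every group receives positive decisions at a similar rate.
    """
    rates = {}
    for g in sorted(set(groups)):
        member_preds = [p for p, gg in zip(predictions, groups) if gg == g]
        rates[g] = sum(member_preds) / len(member_preds)
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical audit batch: decisions for applicants from groups "A" and "B".
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap, rates = demographic_parity_gap(preds, groups)
print(gap, rates)
```

For a continuously learning model, such a check would run on each candidate update rather than once at launch.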

Controlling Autonomous Behaviors to Prevent Harm

Rigorous validation pipelines and ethical guardrails must monitor emergent behaviors in uncontrolled environments, particularly where self-learning models influence high-stakes decisions.
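
One concrete form of validation gate refuses any self-learned update that regresses on a fixed held-out suite or fails a safety check. The function below is a hypothetical sketch. The metric names, the `max_regression` tolerance, and the boolean safety checks are assumptions, and a real pipeline might also log decisions and route rejected updates to human review.

```python
def approve_update(baseline, candidate, safety_checks, max_regression=0.01):
    """Gate a self-learned model update before deployment (hypothetical sketch).

    baseline / candidate: dicts mapping metric name -> score on a fixed
    held-out suite (higher is better). safety_checks: dict of check name -> bool.
    Returns (approved, reasons).
    """
    reasons = []
    for name, base_score in baseline.items():
        new_score = candidate.get(name)
        if new_score is None:
            reasons.append(f"missing metric: {name}")
        elif base_score - new_score > max_regression:
            reasons.append(f"{name} dropped by {base_score - new_score:.3f}")
    reasons += [f"safety check failed: {n}" for n, ok in safety_checks.items() if not ok]
    return not reasons, reasons

# Illustrative scores (assumption): better in-distribution, worse out-of-distribution.
baseline = {"accuracy": 0.91, "ood_accuracy": 0.78}
candidate = {"accuracy": 0.93, "ood_accuracy": 0.74}
ok, why = approve_update(baseline, candidate, {"action_limits_respected": True})
print(ok, why)
```

Gating on every tracked metric, rather than a single headline score, is what catches an update that improves in-distribution accuracy while degrading robustness.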

Open Source Frameworks and Tools Powering Self-Learning Research and Development

Leading Libraries and Platforms

Frameworks such as TensorFlow, PyTorch, and Ray RLlib are pivotal for prototyping and scaling self-learning models, offering rich APIs for reinforcement learning and meta-learning.

Datasets and Benchmarks

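Popular benchmarks such as Meta-World (robotic manipulation tasks for meta- and multi-task reinforcement learning), Meta-Dataset (few-shot image classification), and NIST neural datasets provide standardized environments to validate continual and unsupervised learning performance.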

Investment Trends and Market Outlook for Self-Learning Neural Networks

Funding Dynamics and Startup Ecosystems

Global investment in autonomous AI capabilities surged beyond $4 billion in 2023, concentrated in sectors like autonomous vehicles, bioinformatics, and predictive analytics. Startups pioneering self-learning technology are attracting strategic partnerships with cloud providers and semiconductor manufacturers.

Strategic Corporate Initiatives

Organizations such as Google DeepMind, NVIDIA, and OpenAI are establishing research hubs and incubators that accelerate breakthroughs, fostering an ecosystem that increasingly blurs the boundary between research and commercial deployment.

Future Directions: Toward Truly Autonomous Neural Intelligence

Integrating Symbolic Reasoning with Neural Adaptivity

Hybrid AI approaches that combine symbolic logic with self-learning neural processes promise to surmount limitations in abstract reasoning and causality, enabling more explainable and generalizable intelligence.

Quantum Computing and Neural Network Synergies

Quantum neural networks represent a nascent yet promising direction where quantum principles could exponentially accelerate self-learning computations, though practical implementations remain exploratory.

Collective and Federated Lifelong Learning

Distributed self-learning systems functioning as collective intelligences could leverage cross-organizational insights while maintaining privacy, dramatically expanding the scope and impact of adaptive AI.